Classifying Mood with Convolutional Neural Nets

Can my neural net detect how you feel?

Photo Credit [1]

The GitHub repository for this project can be found here.

ABOUT

With the smartphone functioning as an individual’s camera, it is easy for thousands of similar photos to pile up. A system that recognizes smiles or “happier” images could suggest which photos to keep and which to discard from a group of similar photos.

With this in mind, I aim to classify photos into two categories, happy or sad, using a convolutional neural network (CNN).

DATA

The photos for this project have been scraped from Pexels, a site offering free stock photos.

There are 2,250 photos, about half “happy” and half “sad.” The photos were categorized using the Pexels tagging system. All happy photos were compiled from search results using the search term “happy” and all sad photos were compiled using the search term “sad.”

DESIGN

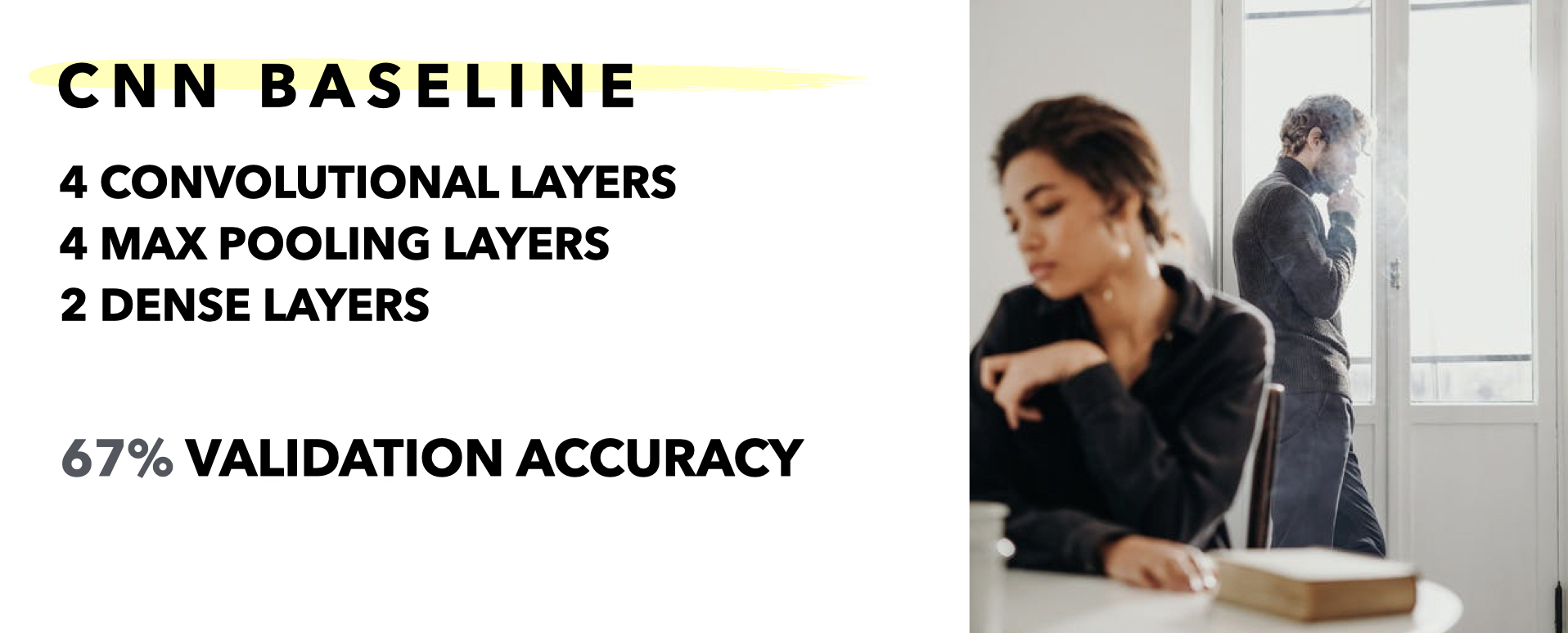

BASELINE MODEL

I trained a convolutional neural net from scratch on a relatively small data set. For each class, I used 500 samples for training, 300 for validation, and a little over 300 for testing.
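As a rough sketch of how a per-class split like this could be produced: the snippet below shuffles one class's file paths and cuts them into training, validation, and test lists. The file names, the `split_per_class` helper, and the figure of 1,125 photos per class (half of 2,250) are assumptions for illustration, not the project's actual code.

```python
import random

def split_per_class(paths, n_train=500, n_val=300, seed=0):
    """Shuffle one class's file paths and cut them into train/val/test lists."""
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains goes to the test set
    return train, val, test

# Hypothetical file names standing in for the scraped photos of one class.
happy_paths = [f"happy_{i:04d}.jpg" for i in range(1125)]
train, val, test = split_per_class(happy_paths)
print(len(train), len(val), len(test))  # 500 300 325
```

Running the same function on the "sad" paths would give the second half of each set.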

My from-scratch baseline model achieved a validation accuracy of about 67%.

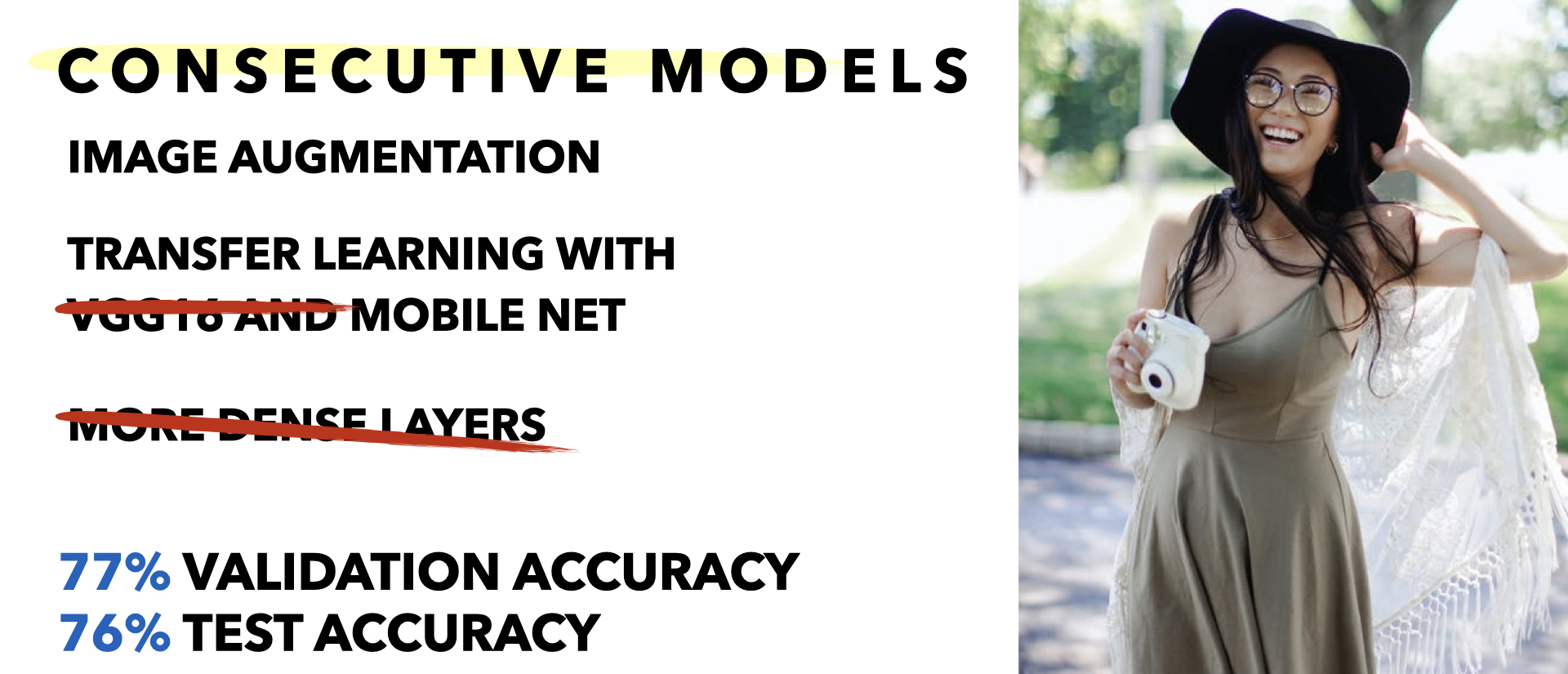

IMAGE AUGMENTATION

I then used image augmentation to generate additional training data. I trained a new network with this data augmentation configuration and added a dropout layer.

The model generalized noticeably better, and accuracy rose to 71%.
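The post does not list which transformations the augmentation used, so the following is only an illustration of the general idea: each training image is randomly flipped or shifted to produce a slightly different copy. Images are represented here as plain lists of pixel rows, and the helper names (`horizontal_flip`, `shift_right`, `augment`) are hypothetical.

```python
import random

def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def shift_right(image, pixels, fill=0):
    """Shift every row right by `pixels`, padding the left edge with `fill`."""
    shifted = []
    for row in image:
        n = min(max(pixels, 0), len(row))  # clamp the shift to the row width
        shifted.append([fill] * n + row[:len(row) - n])
    return shifted

def augment(image, rng, max_shift=1):
    """Return a randomly flipped and shifted copy of `image`."""
    out = [list(row) for row in image]
    if rng.random() < 0.5:                 # flip about half of the time
        out = horizontal_flip(out)
    return shift_right(out, rng.randint(0, max_shift))

tiny_image = [[1, 2, 3],
              [4, 5, 6]]
augmented = augment(tiny_image, random.Random(0))
```

Because the transformed copies keep the original label, the network sees more varied examples of each class without any new photos being collected.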

FINAL MODEL

My final CNN model used transfer learning, with frozen weights from MobileNet. I used the Adam optimizer and trained with a batch size of 20. I used early stopping, monitoring the binary cross-entropy loss.
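To make the stopping rule concrete, here is a minimal framework-free sketch of binary cross-entropy and of patience-based early stopping. The patience value and the loss values are invented for illustration; the post does not state the settings actually used, and the function names are hypothetical.

```python
import math

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def early_stopping_epoch(losses, patience=3):
    """Return the index of the epoch at which training would stop.

    Training stops once the monitored loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(losses):
        if loss < best:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(losses) - 1  # never triggered: ran all epochs

# Made-up monitored losses, one per epoch (not results from this project).
monitored_losses = [0.69, 0.61, 0.58, 0.59, 0.60, 0.62, 0.57]
print(early_stopping_epoch(monitored_losses, patience=3))  # 5
```

In this toy run the loss bottoms out at epoch 2 and then fails to improve for three epochs, so training halts at epoch 5 and never reaches the final value.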

This model achieved 77% accuracy on my validation set and 76% accuracy on my test set.

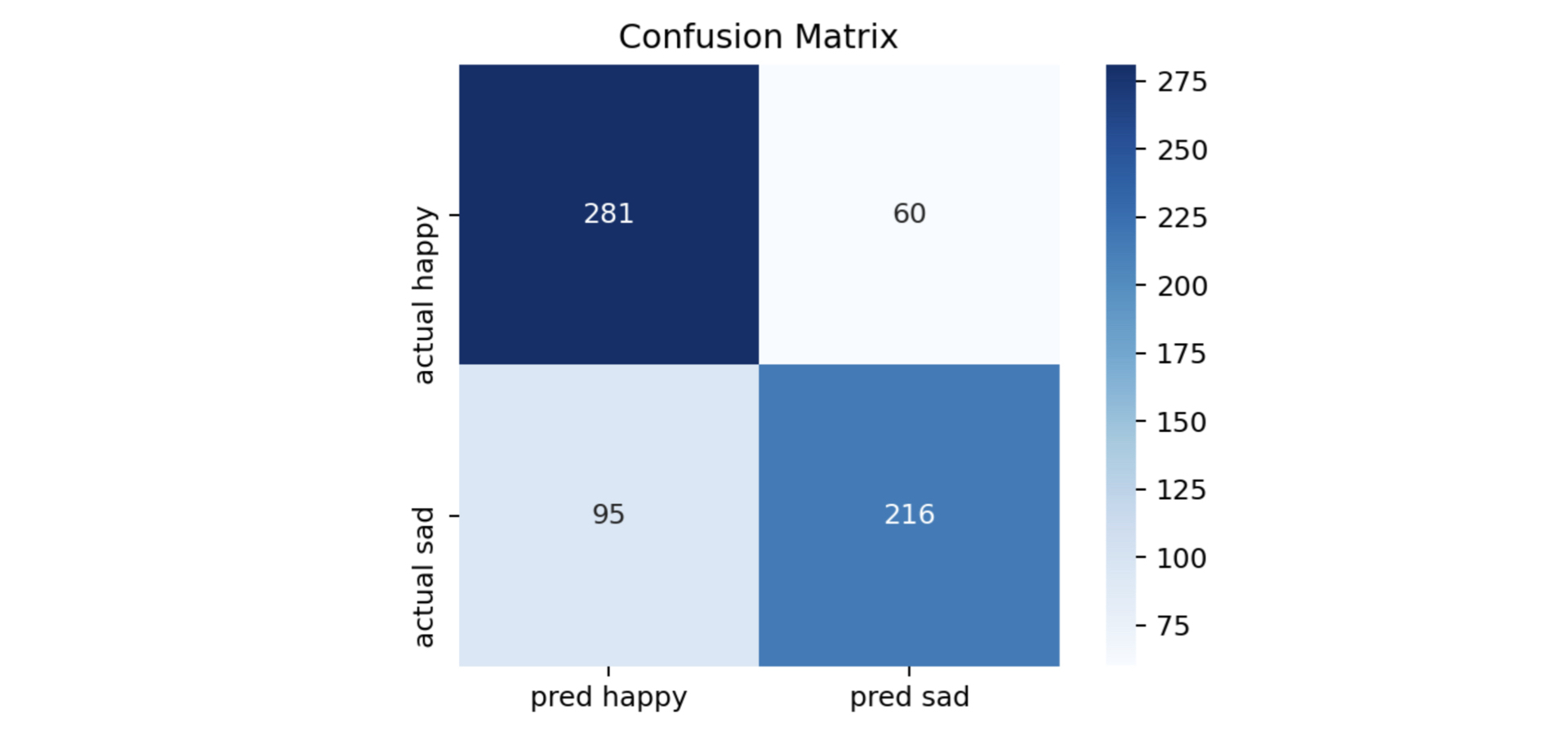

FINDINGS

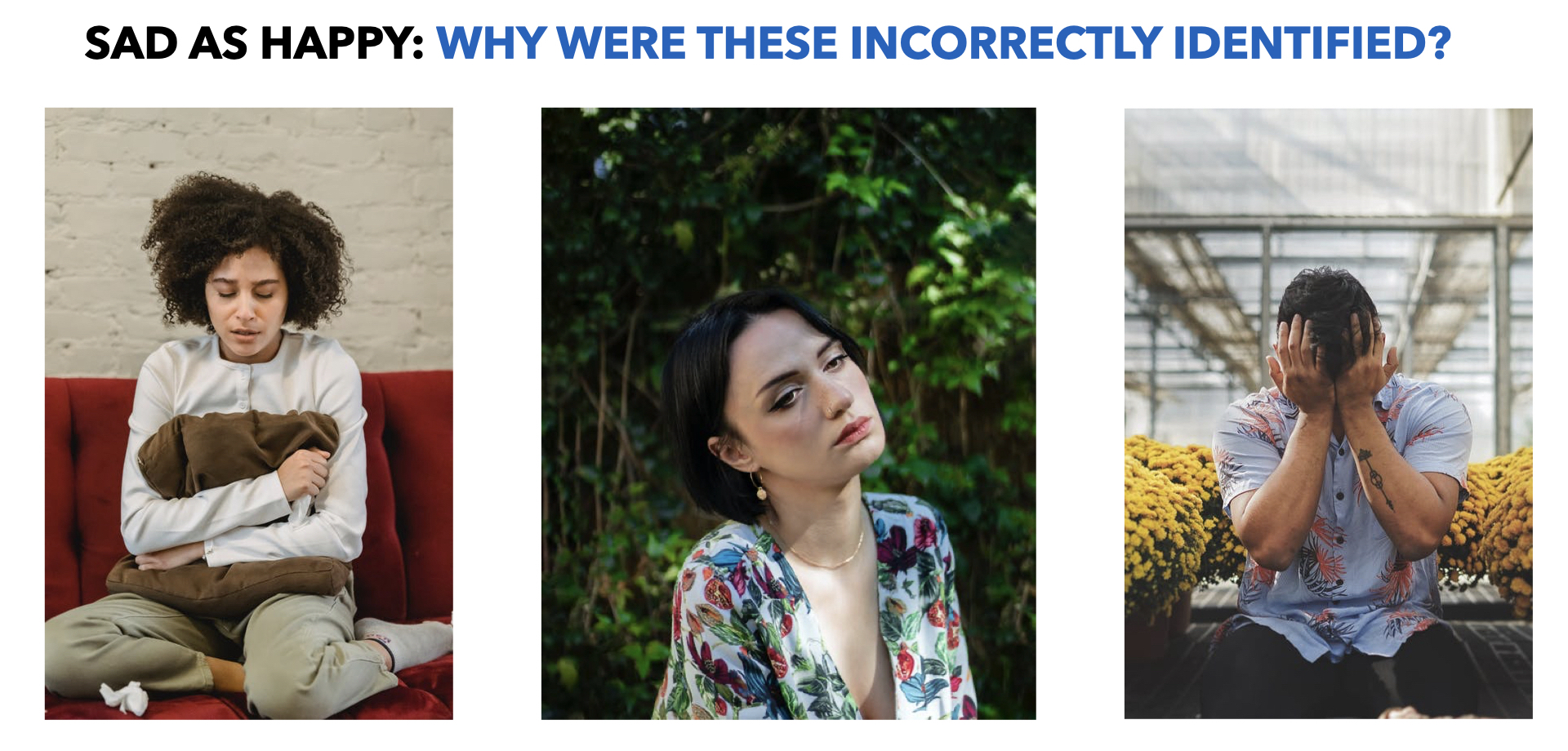

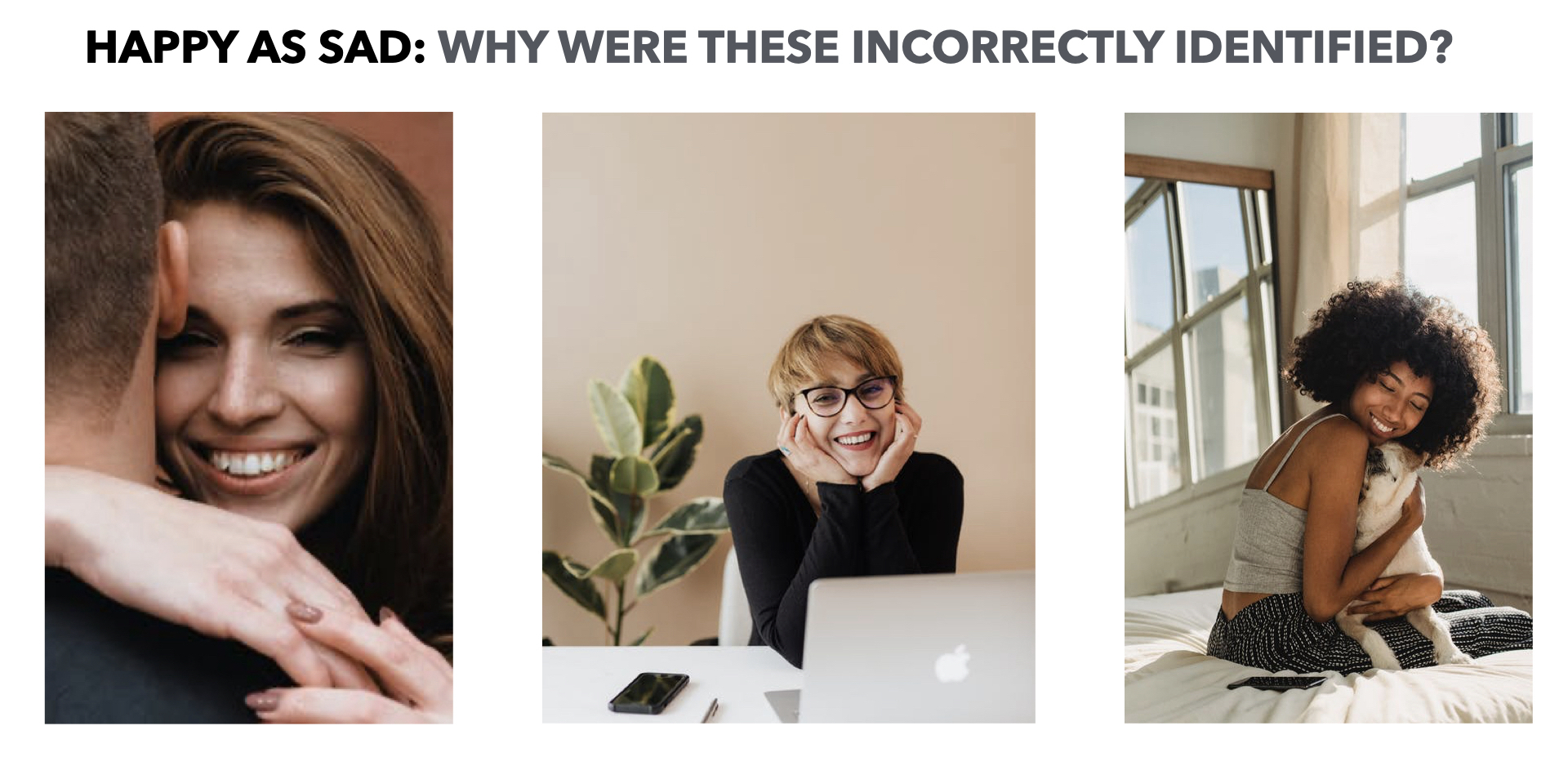

The model was slightly better at identifying “happy” photos, yet it misclassified happy images as sad more often than it misclassified sad images as happy.
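The two kinds of error described here correspond to the off-diagonal cells of a confusion matrix. As a small sketch, the snippet below counts those cells for a binary happy/sad classifier and derives precision and recall for the "happy" class. The labels are toy data, not the project's predictions, and the function names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive="happy"):
    """Count true/false positives and negatives, treating `positive` as the target class."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1   # happy photo predicted happy
        elif truth != positive and pred == positive:
            fp += 1   # sad photo predicted happy
        elif truth == positive and pred != positive:
            fn += 1   # happy photo predicted sad
        else:
            tn += 1   # sad photo predicted sad
    return tp, fp, fn, tn

def precision_recall(tp, fp, fn):
    """Precision and recall for the positive class (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Toy labels for illustration only.
y_true = ["happy", "happy", "happy", "sad", "sad", "sad"]
y_pred = ["happy", "happy", "sad", "sad", "sad", "happy"]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision, recall = precision_recall(tp, fp, fn)
```

Here `fn` counts happy images labeled sad and `fp` counts sad images labeled happy, so comparing the two shows which direction of error is more common.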

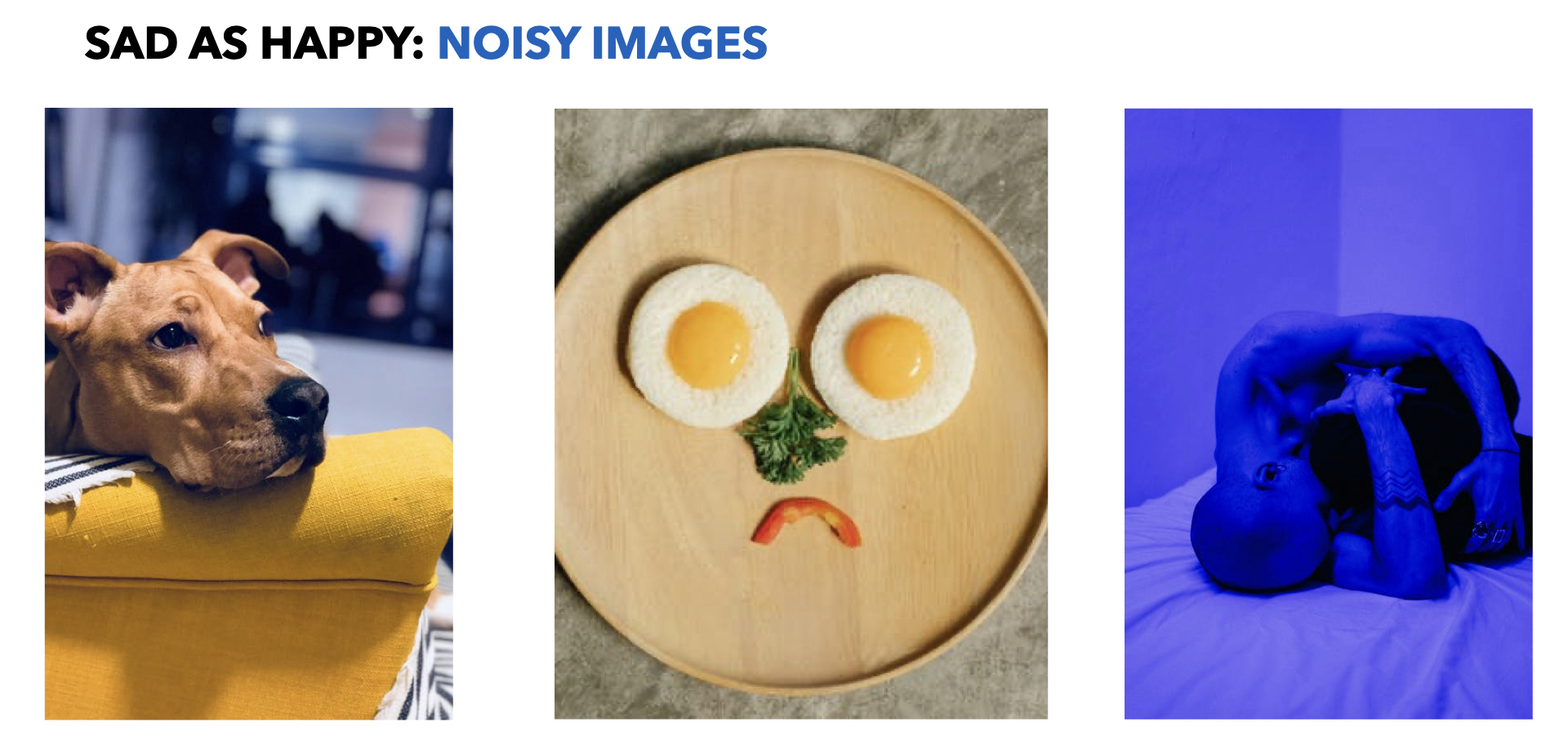

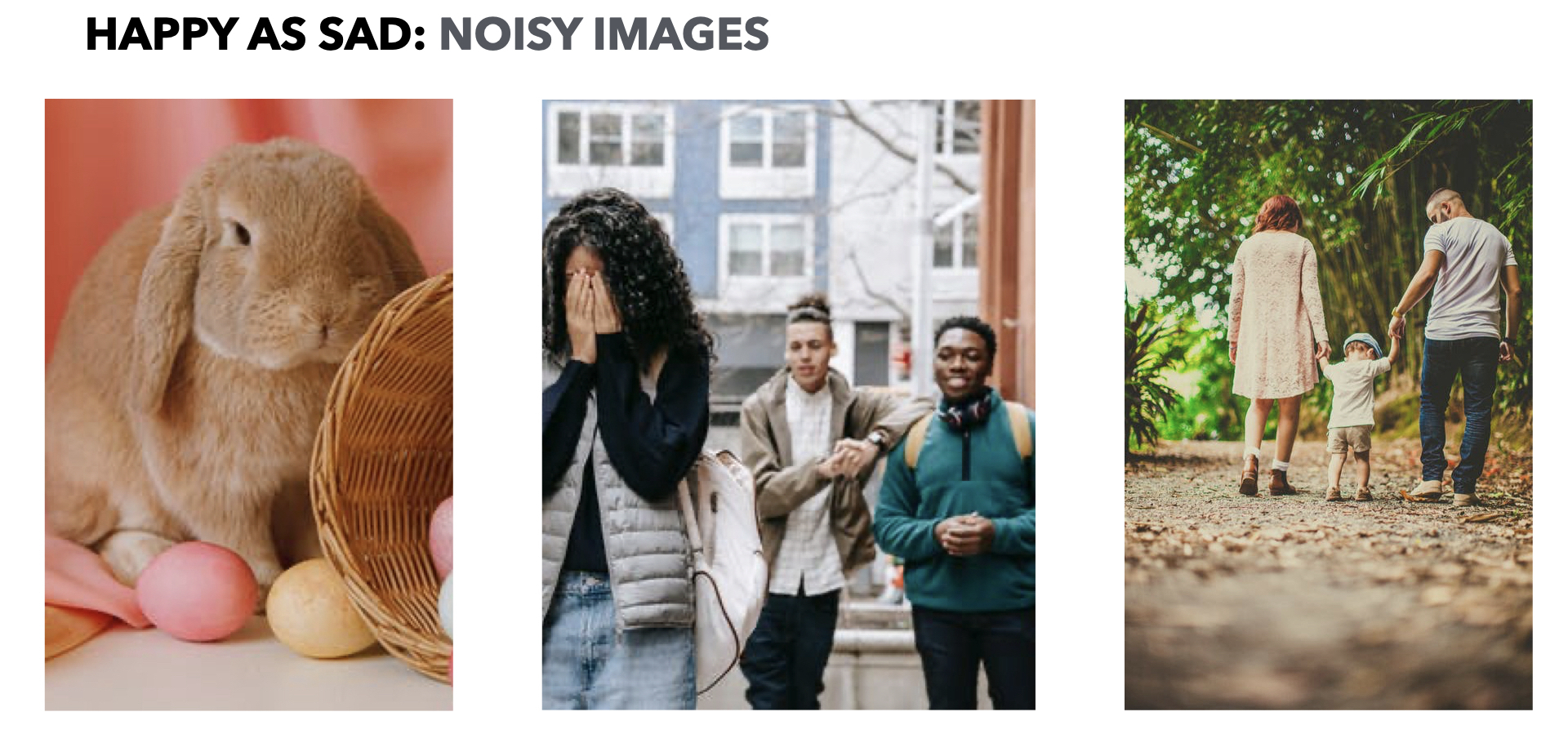

I combed through the incorrectly classified photos to see if I could notice any patterns or trends. There were noisy images in both categories, as well as photos where it is not clear why the model misclassified them.

See some examples below:

FUTURE

I started using a tool called tf-keras-vis to visualize the CNN activations for two of the classes in the MobileNet model (the model I used for transfer learning). I would like to see the CNN activations on the photos for my own classes.

I plan to clean up the noisy images, add more images, and also use a library called OpenCV to isolate the faces in the photos.

I also hope to extend this model framework to multi-class classification.

[1] Photo by Felix Mittermeier from Pexels